Provably Forgotten Signatures: Adding Privacy to Digital Identity

We can enhance existing digital identity systems to support an important privacy feature known as “unlinkability”: sharing attributes without attribution.

In September 2024, I had the honor to present this proposal for Provably Forgotten Signatures at NIST's 2024 Workshop on Privacy-Enhancing Cryptography. You can find the slides from the presentation linked here.

At SpruceID, our mission is to let users control their data across the web. We build systems based on Verifiable Digital Credentials (VDCs) to make the online and offline worlds more secure, while protecting the privacy and digital autonomy of individuals.

Developing models to implement this VDC future requires carefully thinking through every risk of the new model, including risks in the future. One of the edge-case risks privacy researchers have identified is sometimes known as “linkability.”

Linkability refers to the possibility of profiling people by collating data from their use of digital credentials. This risk commonly arises when traceable digital signatures or identifiers are used repeatedly, allowing different parties to correlate many interactions back to the same individual, thus compromising privacy. This can create surveillance potential across societies, whether conducted by the private sector, state actors, or even foreign adversaries.

In this work, we explore an approach that adds privacy by upgrading existing systems to prevent linkability (or “correlation”) rather than overhauling them entirely. It aims to be compatible with already-deployed implementations of digital credential standards such as ISO/IEC 18013-5 mDL, SD-JWT, and W3C Verifiable Credentials, while also aligning with cryptographic security standards such as FIPS 140-2/3. It is compatible with and can even pave the way for future privacy technologies such as post-quantum cryptography (PQC) or zero-knowledge proofs (ZKPs) while unlocking beneficial use cases today.

Why This Matters Now

Governments are rapidly implementing digital identity programs. In the US, 13 states already have live mobile driver’s license (mDL) programs, with over 30 states considering them, and growing. Earlier this year, the EU approved a digital wallet framework that will mandate live digital wallets across its member states by 2026. This continues the momentum of the last generation of digital identity programs with remarkable uptake, such as India’s Aadhaar, which is used by over 1.3 billion people. However, it is not clear that these frameworks plan for guarantees like unlinkability in the base technology, even as adoption momentum increases.

Some think that progress on digital identity programs should stop entirely until perfect privacy is solved. However, that train has long left the station, and calls to dismantle what already exists, has sunk costs, and seems to function may fall on deaf ears. There are indeed incentives for the momentum to continue: demands for convenient online access to government services or new security systems that can curb the tide of AI-generated fraud. Also, it’s not clear that the best approach is to design the “perfect” system upfront, without the benefit of iterative learning from real-world deployments.

In the following sections, we examine two privacy risks that may already exist in identity systems today, and mitigation strategies that can be added incrementally.

Digital ID Risk: Data Linkability via Collusion

One goal for a verifiable digital credential system is that a credential can be used to present only the necessary facts in a particular situation, and nothing more. For instance, a VDC could prove to an age-restricted content website that someone is over a certain age, without revealing their address, date of birth, or full name. This ability to limit disclosures allows the use of functional identity, and it’s one big privacy advantage of a VDC system over today’s identity systems that store a complete scan of a passport or driver’s license. However, even with selective disclosure of data fields, presentations can unintentionally become linkable if the same unique values are used across verifiers.
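To make the mechanics concrete, here is a minimal sketch of selective disclosure using salted digests, loosely in the spirit of formats like SD-JWT and mDL. Everything named here (`ISSUER_KEY`, `issue`, `present`, `verify`) is hypothetical, and an HMAC stands in for the issuer's real public-key signature, which is why the toy verifier shares the issuer's key.

```python
import hashlib
import hmac
import json
import secrets

ISSUER_KEY = secrets.token_bytes(32)  # assumption: HMAC stands in for a real issuer signature


def digest(salt: str, name: str, value) -> str:
    """Salted hash of one attribute; the salt stops verifiers from guessing hidden values."""
    return hashlib.sha256(json.dumps([salt, name, value]).encode()).hexdigest()


def sign(digests: list) -> str:
    return hmac.new(ISSUER_KEY, json.dumps(digests).encode(), "sha256").hexdigest()


def issue(attributes: dict) -> dict:
    """Issuer salts and hashes each attribute, then signs the sorted digest list."""
    disclosures = {n: (secrets.token_hex(16), v) for n, v in attributes.items()}
    digests = sorted(digest(s, n, v) for n, (s, v) in disclosures.items())
    return {"digests": digests, "signature": sign(digests), "disclosures": disclosures}


def present(credential: dict, reveal: list) -> dict:
    """Holder reveals only the chosen attributes, plus the signed digest list."""
    return {
        "digests": credential["digests"],
        "signature": credential["signature"],
        "revealed": {n: credential["disclosures"][n] for n in reveal},
    }


def verify(presentation: dict) -> dict:
    """Verifier checks the signature, then checks each revealed attribute against a signed digest."""
    if not hmac.compare_digest(sign(presentation["digests"]), presentation["signature"]):
        raise ValueError("bad issuer signature")
    for name, (salt, value) in presentation["revealed"].items():
        if digest(salt, name, value) not in presentation["digests"]:
            raise ValueError(f"attribute {name!r} is not covered by the signature")
    return {name: value for name, (_salt, value) in presentation["revealed"].items()}


cred = issue({"name": "Alex Doe", "age_over_18": True, "address": "1 Main St"})
to_website = present(cred, ["age_over_18"])
to_bank = present(cred, ["name"])
website_learns = verify(to_website)                                   # only the age flag
same_signature = to_website["signature"] == to_bank["signature"]      # the linkable value
```

The website learns only the age flag, yet both presentations carry the identical issuer signature and digest list. That shared value is what the rest of this section is about.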

In our example, if a user proves their age to access an age-restricted content website (henceforth referred to simply as “content website”), and then later verifies their name at a bank, both interactions may run the risk of revealing more information than the user wanted if the content website and bank colluded by comparing common data elements they received. Although a check for “over 18 years old” and a name don’t have any apparent overlap, there are technical implementation details such as digital signatures and signing keys that, when reused across interactions, can create a smoking gun.

Notably, a reused digital signature is a uniquely distinguishable value, and even fresh signatures made with the same user key can be correlated through that key. Both can work against the user by revealing more information than intended.

Verifier-Verifier Collusion

To maximize privacy, these pieces of data presented using a VDC should be “unlinkable.” For instance, if the same user who’d proven their age at a content website later went to a bank and proved their name, no one should be able to connect those two data points to the same ID holder, not even if the content website and the bank work together. We wouldn’t want the bank to make unfair financial credit decisions based on the perceived web browsing habits of the user.

However, VDCs are sometimes built on a single digital signature, a unique value that can be used to track or collate information about a user if shared repeatedly with one or more parties. If the content website in our example retains the single digital signature created by the issuing authority, and that same digital signature was also shared with the bank, then the content website and the bank could collude to discover more information about the user than what was intended.

The case where two or more verifiers of information can collude to learn more about the user is known as verifier-verifier collusion and can violate user privacy. While a name-age combination may seem innocuous, a third-party data collector could, over time, assemble a variety of data about a user simply by tracking their usage of unique values across many different verifiers, whether online or in-person. At scale, these issues can compound into dystopian surveillance schemes by allowing every digital interaction to be tracked and made available to the highest bidders or an unchecked central authority.
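As a rough illustration of how little effort this collusion takes, the sketch below joins two verifier logs on a shared signature value. The log contents, field names, and the `collude` helper are all made up for the example.

```python
# Hypothetical verifier logs: each verifier stores what it received per presentation.
website_log = [
    {"signature": "sig-a1", "age_over_18": True, "visited": "2024-09-01"},
    {"signature": "sig-b7", "age_over_18": True, "visited": "2024-09-02"},
]
bank_log = [
    {"signature": "sig-b7", "name": "Alex Doe"},
    {"signature": "sig-c3", "name": "Sam Roe"},
]


def collude(log_a: list, log_b: list, key: str = "signature") -> list:
    """Join two verifier logs on any unique value they both received."""
    index = {row[key]: row for row in log_a}
    return [{**index[row[key]], **row} for row in log_b if row[key] in index]


linked = collude(website_log, bank_log)
```

Here `linked` contains one merged record that ties Alex Doe's name to a visit to the content website, even though neither verifier received both facts on its own.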

Cycling Signatures to Prevent Verifier-Verifier Collusion

Fortunately, a simple solution exists to help prevent verifier-verifier collusion by cycling digital signatures so that each is used only once. When a new VDC is issued by a post office, DMV, or other issuer, it can be provisioned not with a single signature from the issuing authority that produces linkable usage, but with many different signatures from the issuing authority. If user device keys are necessary for using the VDC, as in the case of mobile driver’s licenses, several different keys can be used as well. A properly configured digital wallet would then use a fresh signature (and potentially a fresh key) every time an ID holder uses their VDC to attest to particular pieces of information, ideally preventing linkage to the user through the signatures.

Using our earlier example of a user who goes to a content website and uses their VDC to prove they are over 18, the digital wallet presents a signature for this interaction, and doesn’t use that signature again. When the user then visits their bank and uses a VDC to prove their name for account verification purposes, the digital wallet uses a new signature for that interaction.

Because the signatures are different across each presentation, the content website and the bank cannot collude to link these two interactions back to the same user without additional information. The user can even use different signatures every time they visit the same content website, so that the content website cannot even tell how often the user visits from repeated use of their digital ID.
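A minimal sketch of signature cycling might look like the following. It assumes the issuer signs the same attributes several times with a fresh random salt each time, again with an HMAC standing in for the real signature; `issue_batch` and `Wallet` are illustrative names, not part of any standard.

```python
import hmac
import secrets

ISSUER_KEY = secrets.token_bytes(32)  # assumption: HMAC stands in for the issuer's signature


def issue_batch(attributes: str, copies: int) -> list:
    """Issuer signs the same attributes many times, each copy with a fresh random salt."""
    batch = []
    for _ in range(copies):
        salt = secrets.token_hex(16)
        signature = hmac.new(ISSUER_KEY, f"{salt}|{attributes}".encode(), "sha256").hexdigest()
        batch.append({"salt": salt, "signature": signature})
    return batch


class Wallet:
    """Holds a batch of single-use credential copies."""

    def __init__(self, batch: list):
        self._unused = list(batch)

    def present(self) -> dict:
        """Hand out a never-before-used copy; refuse rather than reuse one."""
        if not self._unused:
            raise RuntimeError("no fresh signatures left; request a new batch")
        return self._unused.pop()

    def remaining(self) -> int:
        return len(self._unused)


wallet = Wallet(issue_batch("age_over_18=true;name=Alex Doe", copies=3))
seen_by_website = wallet.present()["signature"]
seen_by_bank = wallet.present()["signature"]
```

The two verifiers now hold different values, so the log join from the collusion scenario finds nothing. The design choice to raise an error when the batch runs out, instead of silently reusing a copy, keeps the wallet from quietly reintroducing linkability.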

Issuer-Verifier Collusion

A harder problem to solve is known as “issuer-verifier” collusion. In this scenario, the issuer of an ID (or, more likely, a rogue agent within the issuing organization) remembers a user’s unique values (such as keys or digital signatures) and, at a later time, combines them with data from places where those keys or signatures are used. This is possible even in architectures without “phone home” because issuing authorities (such as governments or large institutions) often have power over organizations doing the verifications, or have been known to purchase their logs from data brokers. Left unsolved, the usage of digital identity attributes could create surveillance potential, like leaving a trail of breadcrumbs that can be used to re-identify someone if recombined with other data the issuer retains.
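The sketch below shows why cycling signatures alone does not help here. It assumes a hypothetical issuer that keeps an `issuance_log` mapping every signature it produced to the holder; random tokens stand in for signature values.

```python
import secrets

issuance_log = {}  # what a non-forgetting issuer retains: signature -> holder


def issue_batch(holder: str, copies: int) -> list:
    """Issue single-use signatures, remembering who received each one."""
    signatures = [secrets.token_hex(16) for _ in range(copies)]  # stand-in values
    for signature in signatures:
        issuance_log[signature] = holder
    return signatures


alex = issue_batch("Alex Doe", copies=3)
sam = issue_batch("Sam Roe", copies=3)

# Each verifier sees a different single-use signature, so the verifiers
# cannot link these rows with each other.
website_log = [{"signature": alex[0], "event": "age check at content website"}]
bank_log = [
    {"signature": alex[1], "event": "name check at bank"},
    {"signature": sam[0], "event": "name check at bank"},
]


def reidentify(verifier_rows: list) -> dict:
    """Issuer (or a rogue insider) maps verifier log rows back to holders."""
    trail = {}
    for row in verifier_rows:
        holder = issuance_log.get(row["signature"])
        if holder is not None:
            trail.setdefault(holder, []).append(row["event"])
    return trail


trail = reidentify(website_log + bank_log)
```

Although no two rows share a signature, `trail` still reconstructs Alex Doe's full activity, because the issuer's retained log is the missing join table.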

Approaches Using Zero-Knowledge Proofs

Implementing advanced cryptography for achieving unlinkability, such as with Boneh–Boyen–Shacham (BBS) signatures in decentralized identity systems, has recently gained prominence in the digital identity community. These cryptographic techniques enable users to demonstrate possession of a signed credential without revealing any unique, correlatable values from the credentials.

Previous methods like AnonCreds and U-Prove, which rely on RSA signatures, paved the way for these innovations. Looking forward, techniques originating from the blockchain ecosystem, such as zk-SNARKs and zk-STARKs, offer potential advancements. When implemented with certain hashing algorithms or primitives such as lattices, they can also support requirements for post-quantum cryptography.

However, integrating these cutting-edge cryptographic approaches into production systems that meet rigorous security standards poses challenges. Current standards like FIPS 140-2 and FIPS 140-3, which outline security requirements for cryptographic modules, present compliance hurdles for adopting newer cryptographic algorithms such as the BLS12-381 curve used in BBS and many zk-SNARK implementations. High-assurance systems, like state digital identity platforms, often mandate that cryptographic operations occur within FIPS-validated Hardware Security Modules (HSMs). This requirement necessitates careful consideration, as implementing these technologies outside certified HSMs could fail to meet stringent security protocols.

Moreover, there's a growing industry shift away from RSA signatures due to concerns over their long-term security and increasing emphasis on post-quantum cryptography, as indicated by recent developments such as Chrome's adoption of post-quantum ciphers.

Balancing the need for innovation with compliance with established security standards remains a critical consideration in advancing digital identity and cryptographic technologies.

A Pragmatic Approach for Today: Provably Forgotten Signatures

Given the challenges in deploying zero-knowledge proof systems in today’s production environments, we are proposing a simpler approach that, when combined with key and signature cycling, can provide protection from both verifier-verifier collusion and issuer-verifier collusion by using confidential computing environments: the issuer can forget the unique values that create the risk in the first place, and provide proof of this deletion to the user. This is implementable today, and would be supported by existing hardware security mechanisms that are suitable for high-assurance environments.

It works like this:

- During the final stages of digital credential issuance, all unique values, including digital signatures, are exclusively processed in plaintext within a Trusted Execution Environment (TEE) of confidential computing on the issuer’s server-side infrastructure.

- Issuer-provided data required for credential issuance, such as fields and values from a driver’s license, undergoes secure transmission to the TEE.

- Sensitive user inputs, such as unique device keys, are encrypted before being transmitted to the TEE. This encryption ensures that these inputs remain accessible only within the secure confines of the TEE.

- Within the TEE, assembled values from both the issuer and user are used to perform digital signing operations. This process utilizes a dedicated security module accessible solely by the TEE, thereby generating a digital credential payload.

- The resulting digital credential payload is encrypted using the user’s device key and securely stored within the device’s hardware. Upon completion, an attestation accompanies the credential, verifying that the entire process adhered to stringent security protocols.
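The steps above can be sketched roughly as follows. This is a toy model under heavy assumptions: an HMAC stands in for the HSM-backed signature, an XOR keystream stands in for real encryption (it is not secure), keys are symmetric where a real design would use the enclave's attested public key, and the "attestation" is just a hash of a hypothetical code identifier instead of a hardware-signed quote. All names are illustrative.

```python
import hashlib
import hmac
import json
import secrets

ENCLAVE_CODE = b"issue-credential-v1"  # hypothetical stand-in for the enclave binary
MEASUREMENT = hashlib.sha256(ENCLAVE_CODE).hexdigest()


def toy_cipher(key: bytes, data: bytes) -> bytes:
    """XOR with a SHAKE-256 keystream. NOT secure; a placeholder for real encryption."""
    stream = hashlib.shake_256(key).digest(len(data))
    return bytes(a ^ b for a, b in zip(data, stream))


def enclave_issue(issuer_fields: dict, encrypted_device_key: bytes,
                  transport_key: bytes, signing_key: bytes) -> dict:
    """Models code running inside the TEE: unique values live only in local variables."""
    device_key = toy_cipher(transport_key, encrypted_device_key)  # decrypt the user input
    payload = json.dumps({
        "fields": issuer_fields,
        "salt": secrets.token_hex(16),
        "device_key_id": hashlib.sha256(device_key).hexdigest(),
    }, sort_keys=True).encode()
    signature = hmac.new(signing_key, payload, "sha256").digest()  # stand-in for HSM signing
    sealed = toy_cipher(device_key, payload + signature)           # only the device can open it
    attestation = {"measurement": MEASUREMENT,
                   "sealed_sha256": hashlib.sha256(sealed).hexdigest()}
    # Nothing is logged or stored: the host sees only the sealed blob and the attestation.
    return {"sealed_credential": sealed, "attestation": attestation}


def wallet_open(sealed: bytes, device_key: bytes, attestation: dict, expected: str) -> dict:
    """Wallet checks the attestation before accepting and decrypting the credential."""
    if attestation["measurement"] != expected:
        raise ValueError("unexpected enclave code")
    if hashlib.sha256(sealed).hexdigest() != attestation["sealed_sha256"]:
        raise ValueError("attestation does not cover this credential")
    opened = toy_cipher(device_key, sealed)
    return {"payload": json.loads(opened[:-32]), "signature": opened[-32:].hex()}


transport_key, signing_key, device_key = (secrets.token_bytes(32) for _ in range(3))
result = enclave_issue({"age_over_18": True}, toy_cipher(transport_key, device_key),
                       transport_key, signing_key)
credential = wallet_open(result["sealed_credential"], device_key,
                         result["attestation"], MEASUREMENT)
```

The point of the shape, more than the toy cryptography, is that `enclave_issue` returns only a sealed blob and an attestation. The wallet decides whether to trust the result by comparing the reported measurement against the code it expects, which in this sketch is code that retains nothing.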

This approach ensures:

- Protection Against Collusion: By employing confidential computing and strict segregation of cryptographic operations within a TEE, the risk of verifier-verifier and issuer-verifier collusion is mitigated.

- Privacy and Security: User data remains safeguarded throughout the credential issuance process, with sensitive information encrypted and managed securely within trusted hardware environments.

- Compliance and Implementation: Leveraging existing hardware security mechanisms supports seamless integration into high-assurance environments, aligning with stringent regulatory and security requirements.

By prioritizing compatibility with current environments instead of wholesale replacement, we propose that existing digital credential implementations, including mobile driver’s licenses operational in 13 states and legislatively approved in an additional 18 states, could benefit significantly from upgrading to incorporate this technique. This upgrade promises enhanced privacy features for users without necessitating disruptive changes.

New Approach, New Considerations

However, as with all new approaches, there are some considerations when using this one as well. We will explore a few of them, but this is not an exhaustive list.

The first consideration is that TEEs have been compromised in the past, and so they are not foolproof. Therefore, this approach is best incorporated as part of a defense-in-depth strategy, where there are many layered safeguards against a system failure. Many of the critical TEE failures have resulted from multiple things that go wrong, such as giving untrusted hosts access to low-level system APIs in the case of blockchain networks, or allowing arbitrary code running on the same systems in the case of mobile devices.

One benefit of implementing this approach within credential issuer infrastructures is that the environment can be better controlled, and so more forms of isolation are possible to prevent these kinds of vulnerability chaining. Issuing authorities are not likely to allow untrusted hosts to federate into their networks, nor would they allow arbitrary software to be uploaded and executed on their machines. There are many more environmental controls possible, such as intrusion detection systems, regular firmware patching, software supply chain policies, and physical security perimeters.

We are solving the problem by shifting the trust model: the wallet trusts the hardware (TEE manufacturer) instead of the issuing authority.

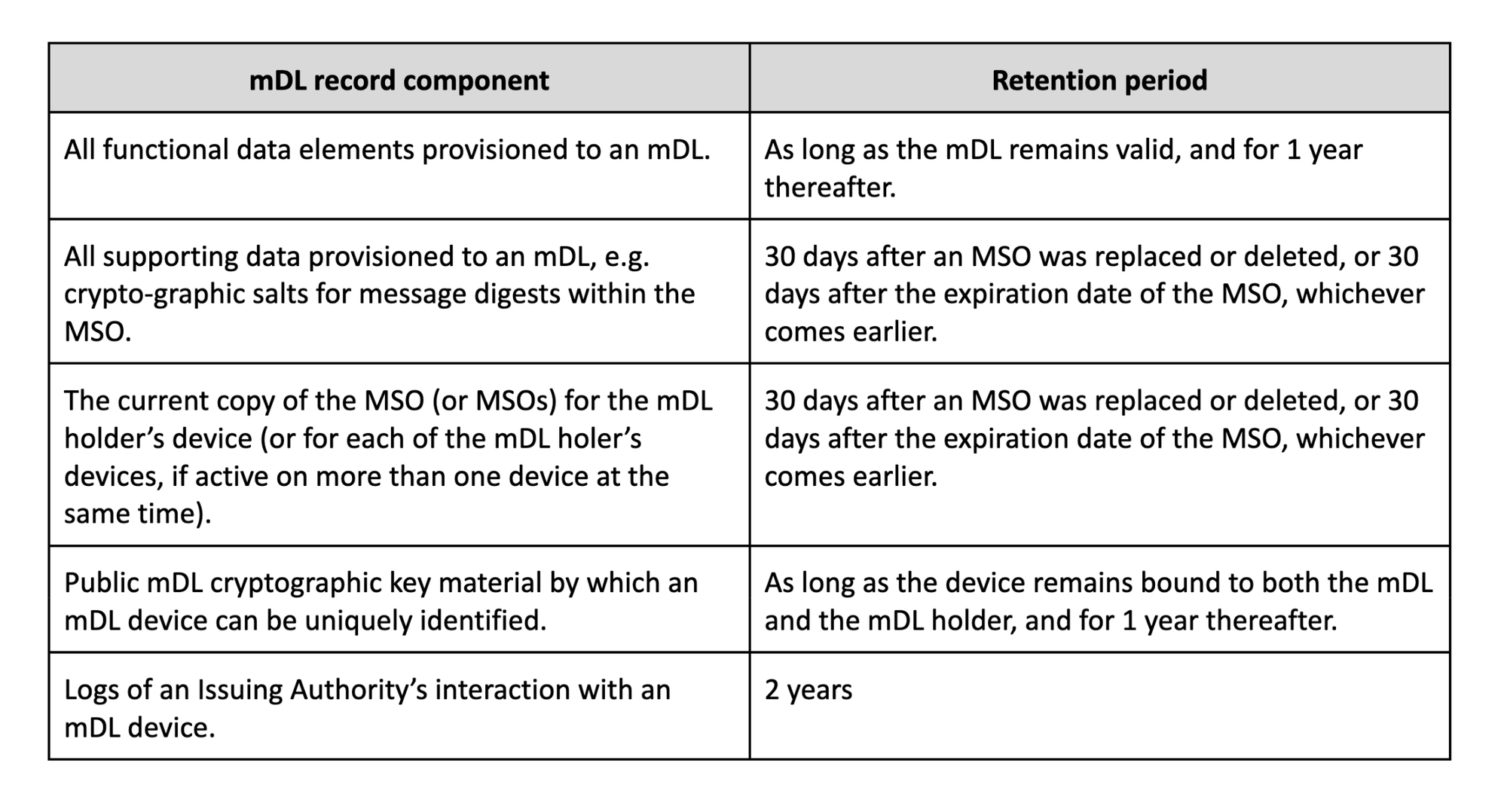

Another consideration is that certain implementation guidelines for digital credentials recommend retention periods for unique values for issuing authorities. For example, AAMVA’s implementation guidelines include the following recommendations for minimum retention periods:

To navigate these requirements, it is possible to ensure that the retention periods are enforced within the TEE by allowing for deterministic regeneration of the materials only during a fixed window when requested by the right authority. The request itself can create an auditable trail to ensure legitimate usage. Alternatively, some implementers may choose to override (or update) the recommendations to prioritize creating unlinkability over auditability of certain values that may be of limited business use.
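One way to picture windowed regeneration is the sketch below. It assumes a hypothetical `RetentionEnclave` that stores no signatures but derives them deterministically from an enclave-held secret, with an HMAC standing in for a deterministic signature scheme. For simplicity the caller supplies the issuance date; a real design would need a trustworthy source for it.

```python
import hmac
import secrets
from datetime import date, timedelta


class RetentionEnclave:
    """Stores no signatures, but can re-derive one while its retention window is open."""

    def __init__(self, retention_days: int, authorized: set):
        self._master = secrets.token_bytes(32)  # assumption: never leaves the enclave
        self._retention = timedelta(days=retention_days)
        self._authorized = set(authorized)
        self.audit_log = []  # every regeneration request leaves a trace

    def _derive(self, record_id: str, payload: bytes) -> str:
        # Deterministic: the same record and payload always yield the same value.
        salt = hmac.new(self._master, record_id.encode(), "sha256").digest()
        return hmac.new(self._master, salt + payload, "sha256").hexdigest()

    def issue(self, record_id: str, payload: bytes) -> str:
        return self._derive(record_id, payload)

    def regenerate(self, record_id: str, payload: bytes, issued_on: date,
                   today: date, requester: str) -> str:
        if requester not in self._authorized:
            raise PermissionError("requester is not authorized")
        if today - issued_on > self._retention:
            raise PermissionError("retention window has closed")
        self.audit_log.append((today.isoformat(), requester, record_id))
        return self._derive(record_id, payload)


enclave = RetentionEnclave(retention_days=30, authorized={"auditor"})
issued_on = date(2024, 9, 1)
original = enclave.issue("record-1", b"age_over_18=true")
recovered = enclave.regenerate("record-1", b"age_over_18=true",
                               issued_on, date(2024, 9, 15), "auditor")
try:
    enclave.regenerate("record-1", b"age_over_18=true",
                       issued_on, date(2024, 12, 1), "auditor")
    expired_allowed = True
except PermissionError:
    expired_allowed = False
```

Inside the window an authorized request recovers the same value and is recorded in `audit_log`; after the window the enclave refuses, so the material is effectively forgotten.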

A third consideration is increased difficulty for the issuing authority to detect compromise of key material if they do not retain the signatures in plaintext. To mitigate this downside, it is possible to use data structures that are able to prove set membership status (e.g., was this digital signature issued by this system?) without linking to source data records or enumeration of signatures, such as Merkle trees and cryptographic accumulators. This allows for the detection of authorized signatures without creating linkability. It is also possible to encrypt the signatures so that only the duly authorized entities, potentially involving judicial processes, can unlock the contents.
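As a sketch of the set-membership idea, the Merkle tree below lets an issuer keep only a single root hash while a presented signature can still be checked against it using an inclusion proof. The function names are illustrative, and the assumption that the proof travels with the credential while the issuer discards the leaves is ours, since retained leaf hashes would be just as linkable as the signatures themselves.

```python
import hashlib


def h(data: bytes) -> bytes:
    return hashlib.sha256(data).digest()


def build_levels(leaves: list) -> list:
    """Return every tree level, leaf hashes first and the root level last."""
    level = [h(b"\x00" + leaf) for leaf in leaves]
    levels = [level]
    while len(level) > 1:
        if len(level) % 2:  # odd level: pair the last node with itself
            level = level + [level[-1]]
        level = [h(b"\x01" + level[i] + level[i + 1]) for i in range(0, len(level), 2)]
        levels.append(level)
    return levels


def prove(levels: list, index: int) -> list:
    """Collect the sibling hashes on the path from one leaf up to the root."""
    proof = []
    for level in levels[:-1]:
        sibling = index ^ 1
        if sibling >= len(level):  # this node was paired with itself
            sibling = index
        proof.append((level[sibling], index % 2 == 1))  # (hash, sibling_is_left)
        index //= 2
    return proof


def verify(root: bytes, leaf: bytes, proof: list) -> bool:
    """Recompute the root from a leaf and its proof; no other leaves are needed."""
    node = h(b"\x00" + leaf)
    for sibling, sibling_is_left in proof:
        node = h(b"\x01" + sibling + node) if sibling_is_left else h(b"\x01" + node + sibling)
    return node == root


signatures = [f"signature-{i}".encode() for i in range(5)]  # stand-in signature values
levels = build_levels(signatures)
root = levels[-1][0]            # the only value the issuer would need to retain
proof = prove(levels, 4)        # would be handed to the wallet with that signature
is_member = verify(root, signatures[4], proof)
is_forged_member = verify(root, b"forged-signature", proof)
```

A genuine signature verifies against the root and a forged one does not, yet the root alone reveals nothing about which signatures were issued or to whom.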

Paving the Way for Zero-Knowledge Proofs

We believe that the future will be built on zero-knowledge proofs that support post-quantum cryptography. Every implementation should consider how it may eventually transition to these new proof systems, which are becoming faster and easier to use and can provide privacy features such as selective disclosure across a wide variety of use cases.

Already, there is fast-moving research on using zero-knowledge proofs in wallets to demonstrate knowledge of unique signatures and possibly the presence of a related device key for payloads from existing standards such as ISO/IEC 18013-5 (mDL), biometric templates, or even live systems like Aadhaar. In these models, it’s possible for the issuer to do nothing different, and the wallet software is able to use zero-knowledge cryptography with a supporting verifier to share attributes without attribution.

These “zero-knowledge-in-the-wallet” approaches require both the wallet and the verifier to agree on implementing the technology, but not the issuer. The approach outlined in this work requires only the issuer to implement the technology. They are not mutually exclusive, and it is possible to have both approaches implemented in the same system. Combining them may be especially desirable when there are multiple wallets and/or verifiers, to ensure a high baseline level of privacy guarantee across a variety of implementations.

However, should the issuer, wallet, and verifier (and perhaps coordinating standards bodies such as the IETF, NIST, W3C, and ISO) all agree to support the zero-knowledge approach atop quantum-resistant rails, then it’s possible to move the whole industry forward while smoothing out the new privacy technology’s rough edges. This is the direction we should move in as an industry.

Tech Itself is Not Enough

While these technical solutions can bring enormous benefits to baseline privacy and security, they must be combined with robust data protection policies to result in safe user-controlled systems. If personally identifiable information is transmitted as part of the user’s digital credential, then by definition the presentations are correlatable; privacy cannot be addressed at the technical protocol level and must instead be addressed by policy.

For example, you can’t unshare your full name and date of birth. If your personally identifiable information was sent to an arbitrary computer system, then no algorithm on its own can protect you from the undercarriage of tracking and surveillance networks. This is only a brief sample of the kind of problem that only policy is positioned to solve effectively. Other concerns range from potentially decreased accessibility if paper solutions are no longer accepted, to normalizing the sharing of digital credentials towards a “checkpoint society.”

Though it is out of scope of this work, it is critical to recognize the important role of policy to work in conjunction with technology to enable a baseline of interoperability, privacy, and security.

The Road Ahead

Digital identity systems are being rolled out in production today at a blazingly fast pace. While they utilize today’s security standards for cryptography, their current deployments do not incorporate important privacy features into the core system. We believe that ultimately we must upgrade digital credential systems to post-quantum cryptography that can support zero-knowledge proofs, such as zk-STARKs, but the road ahead is a long one given the time it takes to validate new approaches for high-assurance usage, especially in the public sector.

Instead of scorching the earth and building anew, our proposed approach can upgrade existing systems with new privacy guarantees around unlinkability by changing out a few components, while keeping in line with current protocols, data formats, and requirements for cryptographic modules. With this approach, we can leave the door open for the industry to transition entirely to zero-knowledge-based systems. It can even pave the path for them by showing that it is possible to meet requirements for unlinkability, so that when policymakers review what is possible, there is a readily available example of a pragmatic implementation.

We hope to collaborate with the broader community of cryptographers, public sector technologists, and developers of secure systems to refine our approach toward production usage. Specifically, we wish to collaborate on:

- Enumerated requirements for TEEs around scalability, costs, and complexity to implement this approach, so that commercial products such as Intel SGX, AMD SEV, Arm TrustZone, AWS Nitro Enclaves, Azure Confidential Computing, IBM Secure Execution, or Google Cloud Confidential Computing can be considered against those requirements.

- A formal paper with rigorous evaluation of the security model using data flows, correctness proofs, protocol fuzzers, and formal analysis.

- Prototyping using real-world credential formats, such as ISO/IEC 18013-5/23220-* mdocs, W3C Verifiable Credentials, IMS OpenBadges, or SD-JWTs.

- Evaluation of how this approach meets requirements for post-quantum cryptography.

- Drafting concise policy language that can be incorporated into model legislation or agency rulemaking to create the requirement for unlinkability where deemed appropriate.

If you have any questions or interest in participation, please get in touch. I will be turning this blog post into a paper by adding reviews of related work, explanations, and some other key sections.

About SpruceID: SpruceID is building a future where users control their identity and data across all digital interactions. We believe that instead of people signing into platforms, platforms should sign into people’s data vaults. Our products power privacy-forward verifiable digital credentials solutions for businesses and governments, with initiatives like state digital identity (including mobile driver’s licenses), digital learning and employment records, and digital permits and passes.